Do Not Open That Trojan Horse

In early September of 1940, a special unit of six senior British military officers and researchers met at London Euston Station with a highly guarded secret. They were transporting a black metal box that was later brought to Liverpool and then handed over to the US. Inside was a cavity magnetron, the latest British military technology, which promised to help bring World War II to an end. After the fall of France, the British government had become increasingly alarmed about a possible Nazi occupation. In such an event, the Nazis might seize any inventions that had not yet gone into production because of inadequate resources and unfavorable conditions in the UK, and use them to immediately strengthen their military position. Hence, after early preparations by Henry Tizard, the main advocate of the mission, and with the approval of Prime Minister Churchill, who hoped to support the Alliance, the Tizard Mission went ahead to further increase military technology cooperation among the Allies.

Years later, that little box inspired a metaphor used in cybernetics, a field concerned with control and feedback that emerged in the 1940s. To paraphrase the founder of cybernetics, Norbert Wiener, a black box is an apparatus that operates with visible inputs and outputs, but without revealing anything about the inner structure that makes such operations possible.[1] Cybernetics has had a profound effect on today's technologies, especially artificial intelligence, in which the concept of the black box is especially evident. Despite its successful debut in the Tizard Mission, the black box has become the subject of considerable criticism in relation to AI.

Media theorist Alexander Galloway defines two types of black boxes. The first type, the black box cypher, reminiscent of the black box in the Tizard Mission, is a cloaked node with no external connectivity. The second type, which Galloway calls the black box function, grants access, but only selectively and according to specific grammars of action and expression.[2] The black box function is a better expression of our cybernetic digital age. It seems to be open in a sense, offering an inviting interface to interact with. This interaction, however, obscures the fact that what has been granted is only the functioning of the black box, not its decoding. It appears to reveal its inner structure but only leaves us with a more detailed, interactive, and yet impermeable surface. We control Photoshop through a GUI (graphical user interface), and we operate Microsoft Windows using a keyboard and mouse. The more we seem to learn about their complex inner workings, the more we are restrained by the human-machine interaction.

The black box was invented to divert attention with an ordinary exterior, to obscure with limited inputs and outputs, and to encrypt secrets with overly complex inner structures. That is why, ever since the birth of the metal box, there have been calls to open it. Or perhaps the calls began much earlier, since the demand for transparency and the disdain for hidden agendas permeate every sector of society. We become frustrated when we lack the ability or the right tools to understand something built with our own knowledge, and we become even more worried when we realize that it may someday slip out of our control. Opening the formidable black box seems to be the only choice left.

The urge to “open the black box” has peaked in recent years with the widespread use of AI. In Europe, for example, the General Data Protection Regulation (GDPR) mandates the right to explanation. It states, “(the data subject should have) the right ... to obtain an explanation of the decision reached.”[3] There is so much concern about AI functioning as a black box because the complexity of a neural network impedes any attempt to investigate and correct the flaws from which ethical issues and false decisions might emerge. Despite its military origin and its strong connection to cybernetics and machine learning, the term “black box” is by no means only technical jargon. It is no less important in disciplines such as philosophy. In “Opening Pandora's Black Box,” the introduction to his book Science in Action, Bruno Latour gives an example of how a scientific concept can be a kind of black box. Revisiting the story of the DNA helix, he suggests that this concept is so widely accepted as an axiom that it is the default for all biological research, and scientists will never attempt to open this socially constructed black box.[4] Hence, once a black box is established and the critical voices recede, we lose interest in opening it.

Unfortunately, all attempts to open the black box, whether in machine learning or in deconstructing knowledge production, remain at best a noble wish. This is why the corresponding regulation in the GDPR has been the subject of so much debate: the overly broad scope of so-called automated decisions could neutralize the right to explanation.[5] Moreover, fully understanding a neural network is nearly impossible. In a similar vein, Langdon Winner argues in his essay “Upon Opening the Black Box and Finding It Empty” that the effort to unpack technology and look inside the black box is superficial.[6] Although such a reading seeks to think of technology as a social construction, this method loses sight of, for instance, marginalized groups who have no voice in technology and yet are profoundly affected by it. When Winner says that the black box is empty, he is warning us that understanding technology alone does not yield sufficient social benefits. On the contrary, what we should do is reconstruct technology.

Following Winner, is there any way to make use of the black box other than opening it, as we instinctively would like to do? Can we reconstruct the black box? A timely reminder comes from Kavita Philip. In what she and her co-authors call postcolonial computing, Philip offers several ways to contemplate current technology through a postcolonial lens. One of the tactics Philip proposes is to “investigate its (technoscientific object's) contingency not only locally but in the infrastructures, assemblages, and political economies that are the conditions of its possibility.”[7] In other words, instead of taking technology and the culture surrounding it for granted, we should ask what milieu it comes from and what makes us accept it as natural.

Now, if we temporarily shift our attention from the black box to the call to open the black box, some questions arise. Who, or which social class, most strongly supports opening the black box? In which countries is this ethos most widely accepted? What is the social, cultural, and historical background? Is it merely a coincidence that opening the black box corresponds to surveillance capitalism’s drive for information totality?[8] What if the discourse of opening the black box is based on Western and even imperialist values? Moreover, if we consider the impulse to open the black box to be the ideological expression of the dominant class that possesses the resources and technology, should we question the issue from the perspective of the weak? If we are going to be rebellious, how about considering alternative ways to use it, for instance, by keeping it closed?

In human history, there have been metaphors for keeping secrets other than the black box. There have been Pandora's box and the Trojan Horse, both vessels of subversive and rebellious power. It is worth asking why Latour chose Pandora's box rather than the Trojan Horse as a metaphor to illustrate his reflections on the DNA helix. We haven't yet considered what we can encode into a black box and when the appropriate time to open it might be. What distinguishes the Trojan Horse from Pandora's box is the nature of the power within – the potential for evil that could destroy human civilization, or the resolve needed to overcome tyranny. While Pandora's box reminds us of the danger of opening a secret carelessly, the Trojan Horse is an example of the kind of secret one keeps until the right time. In her well-known essay “Trojan Horses: Activist Art and Power,” published in 1984 in Art After Modernism, Lucy Lippard associates the Trojan Horse with activist art.[9] In her account, activist art unpacks itself within the art institution, masquerading as an aesthetic object or event. For Lippard, the crucial point of the Trojan Horse metaphor is that art and aesthetic production, as well as the activist movement, originate outside of a community or institution and move inside. Such art acts under the enemy's skin, right in front of those who need to be subverted. Hence, staying inside the black box is not the choice of a coward, but a tactical method – a way to challenge the existing regime.

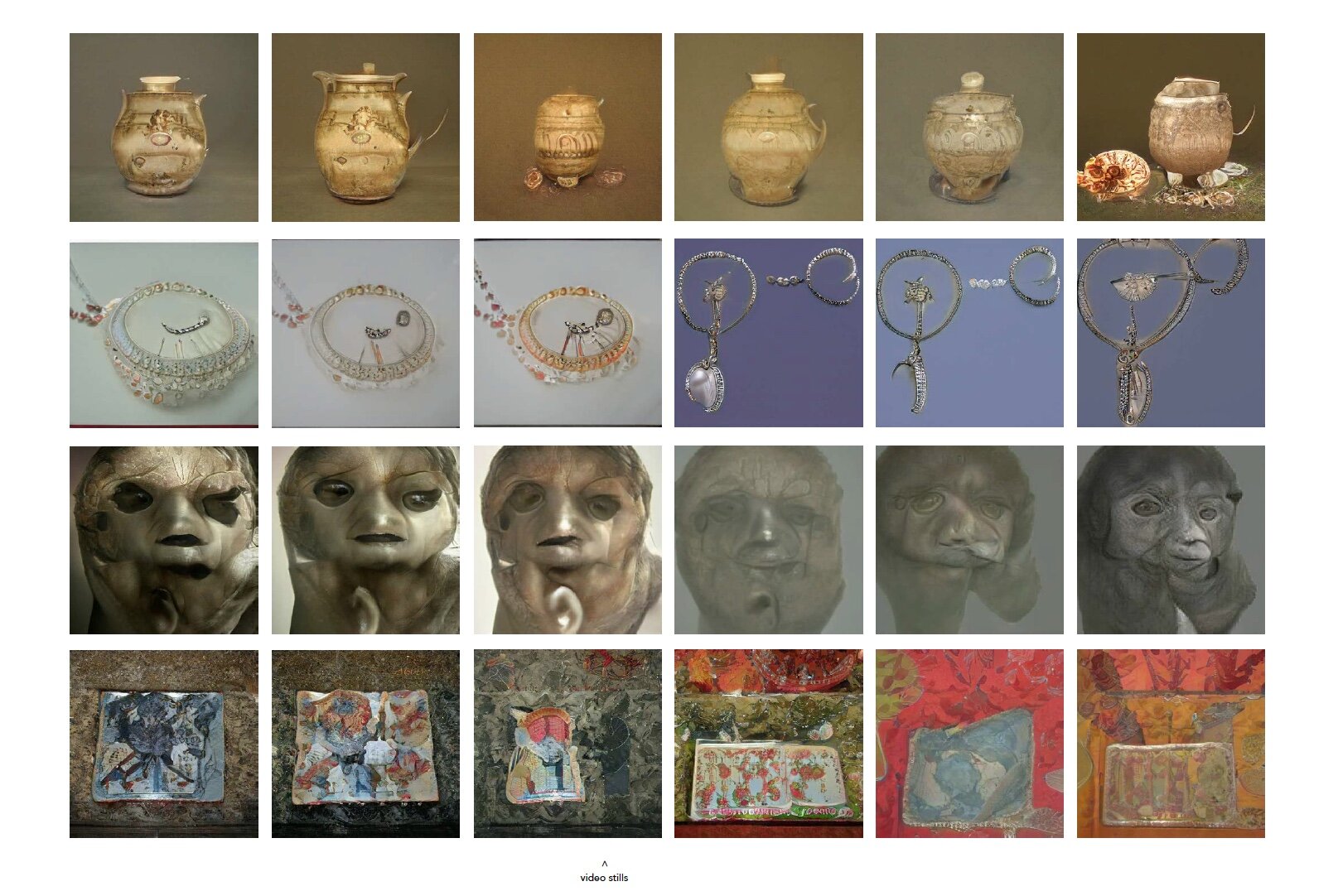

The recent theft scandal at the British Museum offered the perfect opportunity to revisit Nora Al-Badri's work “Babylonian Vision” (2020). For a long time, museum collections have been in the spotlight for their relationship to colonialism and imperialism. Often these cultural artifacts are relegated to glass cages in the name of preservation, and the fact that they were torn from their homeland and original contexts is not given sufficient attention. Meanwhile, calls for the return of these artifacts continue.[10] Aside from the physical artifacts, another tough issue to resolve involves the copyright of digitized materials and documents. To address the contentious ownership rights of digital cultural heritage, Al-Badri trained a neural network on more than 10,000 images of Mesopotamian, Neo-Sumerian, and Assyrian artifacts, which she acquired legally and illegally from five different museums. Though she asked for permission from these museums in advance, only two of them provided access to their open API.

The advantage of using deep learning is that, because AI functions like a black box, the newly generated deepfake artifact images can no longer be traced back to their original “parents.” Ironically, the black box in a GAN (generative adversarial network) poses a challenge to institutions that perpetuate imperialism and colonial exploitation. It is because of the black box in AI that those institutions can no longer claim their controversial ownership. Such ownership has led to the current predicament, whereby the ownership of a culture is decided not by its own people but by historically dominant countries and individuals. Al-Badri's black box isn't meant to hide, or at least not entirely, but to open, to liberate one's culture from the prison of disciplinary cultural institutions. In the same way that what we put in a black box can make it into either Pandora's box or a Trojan Horse, to cite Al-Badri's own words, “what is at issue is the meaning that we give to data.”[11] In this case, Robin Hood’s digital black box is emancipatory rather than simply a problem of ethics.

Today, a black box can do more than simply conceal secrets. It is not limited to the class that controls the latest technology or that hoards the most cultural resources. On the contrary, it is a weapon, the Trojan Horse of the digital age, allowing the weak the chance to confound the powerful, to taunt them with an opaque joke until the day when the subversive power can finally be unleashed from within. As the words inscribed on the Golden Snitch in Harry Potter put it, “I open at the close.” Before the dawn arrives, well, let's keep the black box closed.

[1] Wiener, N., Hill, D. and Mitter, S.K. (2019) Cybernetics: or control and communication in the animal and the machine. Reissue of the 1961 second edition. Cambridge, Massachusetts London, England: The MIT Press, p. 285.

[2] Galloway, A.R. (2021) Uncomputable: play and politics in the long digital age. Brooklyn, NY: Verso, p. 226.

[3] Vollmer, N. (2023) Recital 71 EU General Data Protection Regulation (EU-GDPR). Privacy/Privazy according to plan. Available at: https://www.privacy-regulation.eu/en/r71.htm (Accessed: 20 September 2023).

[4] Latour, B. (2015) Science in action: how to follow scientists and engineers through society. Nachdr. Cambridge, Mass: Harvard Univ. Press, p. 16.

[5] See Edwards, L. and Veale, M. (2017) ‘Slave to the Algorithm? Why a Right to Explanation is Probably Not the Remedy You are Looking for’, SSRN Electronic Journal [Preprint]. Available at: https://doi.org/10.2139/ssrn.2972855.

[6] Winner, L. (1993) ‘Upon Opening the Black Box and Finding It Empty: Social Constructivism and the Philosophy of Technology’, Science, Technology, & Human Values, 18(3), pp. 362–378.

[7] Philip, K., Irani, L. and Dourish, P. (2012) ‘Postcolonial Computing: A Tactical Survey’, Science, Technology, & Human Values, 37(1), pp. 3–29. Available at: https://doi.org/10.1177/0162243910389594.

[8] Zuboff, S. (2019) The age of surveillance capitalism: the fight for a human future at the new frontier of power. Paperback edition. London: Profile Books, p. 375.

[9] Lippard, L.R. (1984) ‘Trojan Horses: Activist Art and Power’, in Art After Modernism.

[10] For the British Museum's missing items and the calls for their return, see Hundreds of items ‘missing’ from British Museum since 2013 (2023) The Guardian. Available at: https://www.theguardian.com/culture/2023/aug/24/hundreds-of-items-missing-from-british-museum-since-2013 (Accessed: 20 September 2023) and Wang, F. (2023) China state media calls on British Museum to return artefacts, BBC News. Available at: https://www.bbc.com/news/world-asia-china-66636705 (Accessed: 20 September 2023).

[11] Al-Badri, N. (no date) The post-truth museum, Open Secret. Available at: https://opensecret.kw-berlin.de/essays/the-post-truth-museum/ (Accessed: 20 September 2023).